0 Introduction

As one of the most advanced active microwave imaging radar systems, synthetic aperture radar (SAR) can obtain high-resolution images of observed targets[1], which is of great significance in both civil and military applications. Research on SAR systems started in the 1950s, when early SAR imaging systems worked in a single-frequency, single-polarization mode[2]. With the rapid development of SAR imaging systems, polarimetric SAR (PolSAR) technology, which adopts a multi-channel, multi-polarization working mode, has evolved dramatically over the past several decades[3].

PolSAR systems usually transmit and receive horizontally and vertically polarized waves with two antennas, obtaining backscattered echo information in four polarization combinations (HH, VV, HV, and VH)[4]. PolSAR can obtain the complex scattering matrix of the measured medium, which carries various types of information, including amplitude, phase, polarization and scattering entropy. By effectively interpreting the gathered information, we are able to accurately retrieve the physical, geometric and dielectric properties of the observed targets. PolSAR images have been extensively applied in image interpretation tasks, such as image classification, target recognition and target detection, among which image classification plays a critical role. The objective of PolSAR image classification is to assign a class label to each pixel in a PolSAR image[5]. The classification results can not only be output directly to end users for final decisions, but can also offer auxiliary support for subsequent higher-level tasks, such as target detection and recognition. PolSAR image classification has been widely applied in various fields, including urban planning, agriculture assessment, environmental monitoring and military surveillance.
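To make the data representation concrete, the following minimal sketch builds the Pauli scattering vector from the scattering matrix entries and estimates the coherency matrix T by multilook averaging. It assumes the monostatic, reciprocal case (HV equal to VH), and the function names `pauli_vector` and `coherency_matrix` are illustrative only.

```python
import numpy as np

def pauli_vector(s_hh, s_hv, s_vv):
    """Pauli scattering vector, assuming reciprocity (S_hv == S_vh)."""
    return np.array([s_hh + s_vv, s_hh - s_vv, 2.0 * s_hv], dtype=complex) / np.sqrt(2.0)

def coherency_matrix(k_samples):
    """Multilook estimate T = mean(k k^H) over an (n_looks, 3) array of Pauli vectors."""
    k = np.asarray(k_samples, dtype=complex)
    return k.T @ k.conj() / k.shape[0]

# Single-look example: the trace of T equals the total power (SPAN).
k = pauli_vector(1 + 1j, 0.2j, 0.5 - 0.3j)
T = coherency_matrix(k[None, :])
span = np.trace(T).real
```

The resulting T is a 3×3 Hermitian matrix, and its diagonal entries T11, T22 and T33 are real-valued powers.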

PolSAR image classification is a difficult remote sensing task for the following reasons. First, SAR acquires images under a coherent imaging mechanism that is totally different from that of optical imaging systems, which leads to poor readability and scene comprehensibility. Second, PolSAR data provide more abundant yet more complex information than single-polarization SAR data, adding to the difficulty of image interpretation. Therefore, extracting strong features to discriminate different terrains and designing effective classification models have always been research hotspots in the field of remote sensing.

SAR image classification originally depended on human visual recognition. With the development of computer science, machine learning-based PolSAR image classification approaches have become the mainstream in recent years. Multiple machine learning methods, such as the Bayesian method[6], k-nearest neighbor[7], neural networks[8-9], support vector machines (SVM)[10-12], spectral clustering[13] and discriminative clustering[14], have been employed to solve the PolSAR image classification problem.

However, all the above methods are based on handcrafted features. Therefore, their classification performance depends greatly on feature extraction, which usually takes significant effort and requires deep domain knowledge. The extracted features may not be suitable for an arbitrary classifier, and thus the classifier has to be selected carefully[15-16]. Recently, the booming development of deep learning has paved a new way for feature extraction and has further contributed to PolSAR image classification. Its advantages lie in two aspects:

1) Compared with conventional methods using handcrafted features, deep neural networks can automatically learn abstract and data-driven polarimetric representations, exhibiting strong discriminating and generalization abilities in classifying different terrains.

2) In addition to automatic feature extraction, deep neural networks also have an inherent capacity to hierarchically encode spatial information, which is particularly important for land cover classification since spatial patterns and relationships are commonly involved in real-world terrains.

In recent years, deep learning-based PolSAR image classification has attracted more and more attention, and a great number of approaches have been proposed. Various deep network structures, such as deep belief networks (DBN)[17], convolutional neural networks (CNN)[15,18], convolutional autoencoders (CAE)[19], recurrent neural networks (RNN)[20] and generative adversarial networks (GAN)[21], have been exploited in the PolSAR image classification task.

1 Deep Learning-based PolSAR Image Classification

According to whether and how annotations are used in the classification process, PolSAR image classification methods can be roughly divided into supervised, unsupervised and semisupervised methods. Supervised algorithms leverage labeled pixels to train a classifier and then apply it to predict the remaining pixels. Unsupervised algorithms do not use any labels in the classification process. Semisupervised algorithms use both labeled and unlabeled data to improve classification performance and generalization ability. Apart from the above three categories, active learning is another special machine learning paradigm, which aims at minimizing expert involvement during annotation by iteratively discovering the most informative set of samples for manual annotation.

Next, we review in turn the recent application of the above four categories of deep learning-based approaches to PolSAR image classification.

1.1 Deep Supervised Methods

Supervised methods use a number of labeled pixels to train a classifier, and the learned classifier is then applied to predict the remaining pixels. For these methods, a training set composed of labeled samples from the different classes is chosen in advance, and classifiers are then trained with different learning algorithms. After that, the learned classifiers are applied to the test set. Research on supervised methods mainly focuses on the classification and feature extraction approaches. If the chosen training set covers all terrain types and properly expresses the terrain characteristics, supervised algorithms can achieve more precise and reliable classification results than unsupervised algorithms. This means that the performance of supervised algorithms relies largely on the selection of a good training set with ground-truth class labels.

Recently, deep learning techniques have been introduced to supervised PolSAR image classification and have achieved promising performance, among which CNN is the most extensively studied. We describe the structures of CNN and RNN and their applications in the following subsections.

1.1.1 Convolutional Neural Networks

A typical CNN consists of convolutional layers, pooling layers, fully connected layers and an output layer. The network layers are structured in a cascaded manner, where the output of the previous layer acts as the input of the next one[16]. The input of the whole network is usually the raw data or low-level features. Based on this deep architecture, CNN can learn abstract and data-driven feature representations from the input. The training of CNN includes forward propagation and back propagation. A loss function, which is a function of the weight parameters of the network layers, is generally defined to measure the difference between the network output and the ground truth class labels. By minimizing the loss function via optimization algorithms such as gradient descent, the network parameters are progressively updated until convergence.
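The loss minimization described above can be sketched as follows. For brevity, the sketch replaces the full CNN with a single linear layer followed by Softmax, so it only illustrates the cross-entropy loss and the gradient descent update; the learning rate and the helper names are assumptions made for illustration.

```python
import numpy as np

def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # subtract the row maximum for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def cross_entropy(W, X, y):
    """Mean cross-entropy between Softmax outputs and integer class labels y."""
    p = softmax(X @ W)
    return -np.mean(np.log(p[np.arange(len(y)), y]))

def gradient_step(W, X, y, lr=0.5):
    """One gradient descent update of the weights W."""
    p = softmax(X @ W)
    p[np.arange(len(y)), y] -= 1.0        # gradient of the loss w.r.t. the logits
    grad = X.T @ p / len(y)
    return W - lr * grad

rng = np.random.default_rng(0)
X = rng.normal(size=(60, 4))              # toy features, not PolSAR data
y = (X[:, 0] > 0).astype(int)
W = np.zeros((4, 2))
initial_loss = cross_entropy(W, X, y)
for _ in range(50):
    W = gradient_step(W, X, y)
final_loss = cross_entropy(W, X, y)
```

In a real CNN the same update is applied to every layer, with the gradients obtained by back propagation.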

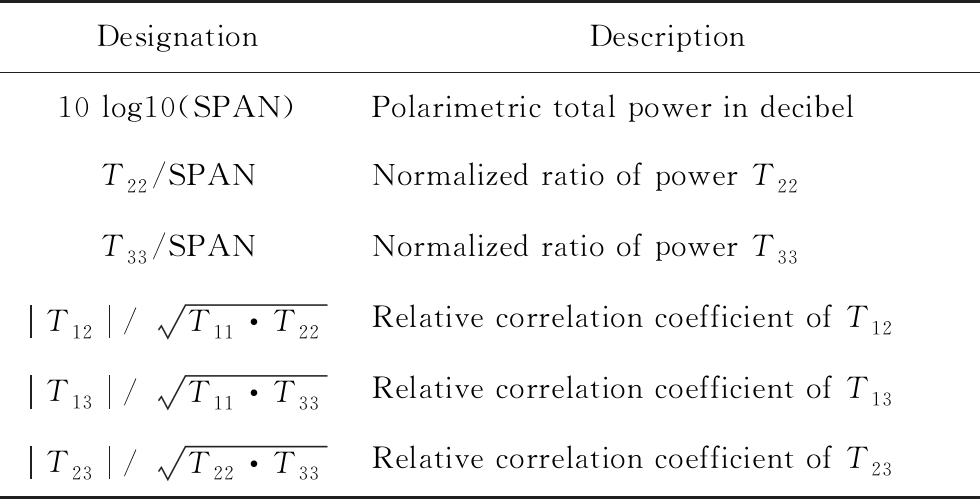

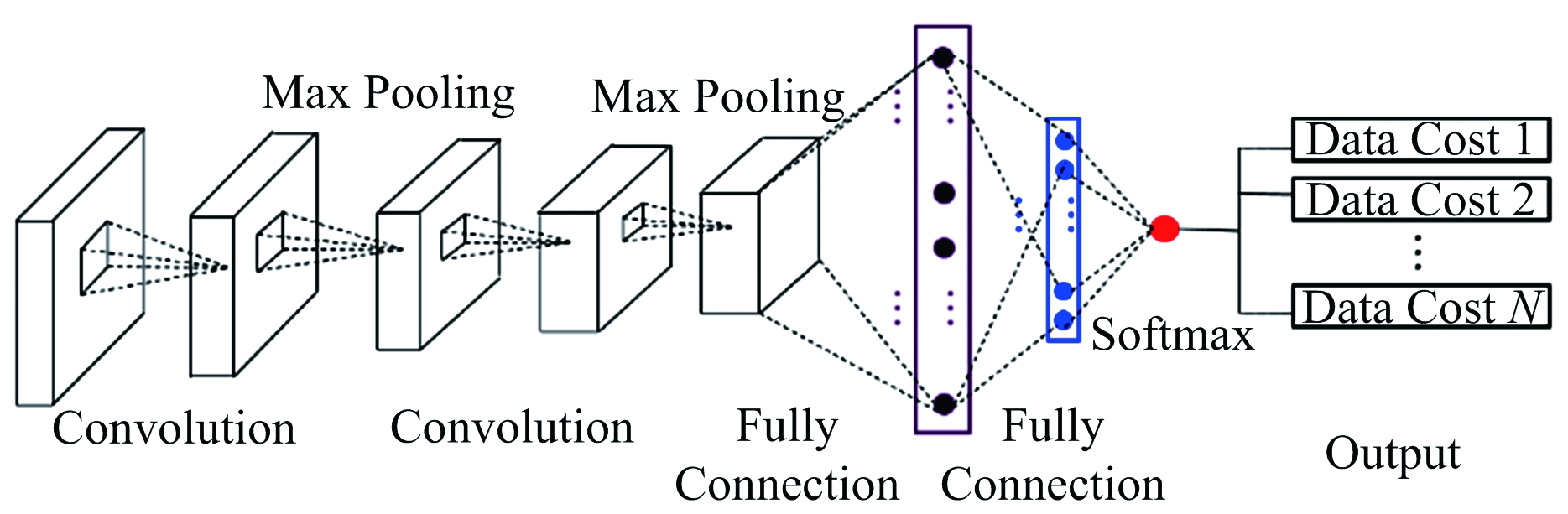

Zhou et al.[15] first proposed a CNN-based PolSAR image classification method, where hierarchical polarimetric spatial features are automatically learned from a 6-dimensional real vector derived from the T matrix of PolSAR data, as shown in Table 1. The employed network contains two convolutional layers interleaved with two max-pooling layers, two fully connected layers, and a Softmax classifier connected to the output, as shown in Figure 1. Stochastic gradient descent is utilized to train the network.

Table 1 Polarimetric raw features

| Designation | Description |
| --- | --- |
| 10 log10(SPAN) | Polarimetric total power in decibels |
| T22/SPAN | Normalized ratio of power T22 |
| T33/SPAN | Normalized ratio of power T33 |
| T12/√(T11·T22) | Relative correlation coefficient of T12 |
| T13/√(T11·T33) | Relative correlation coefficient of T13 |
| T23/√(T22·T33) | Relative correlation coefficient of T23 |
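The features of Table 1 can be computed from a 3×3 coherency matrix as in the sketch below. It assumes that SPAN is the trace of T and that the correlation coefficients use the magnitude of the off-diagonal entry normalized by the square root of the product of the corresponding diagonal entries; the function name `polarimetric_features` is hypothetical.

```python
import numpy as np

def polarimetric_features(T):
    """6-dimensional real feature vector from a 3x3 Hermitian coherency matrix T."""
    T = np.asarray(T, dtype=complex)
    t11, t22, t33 = T[0, 0].real, T[1, 1].real, T[2, 2].real
    span = t11 + t22 + t33                   # assumed: SPAN = trace(T)
    return np.array([
        10.0 * np.log10(span),               # total power in decibels
        t22 / span,
        t33 / span,
        abs(T[0, 1]) / np.sqrt(t11 * t22),   # assumed magnitude and square-root normalization
        abs(T[0, 2]) / np.sqrt(t11 * t33),
        abs(T[1, 2]) / np.sqrt(t22 * t33),
    ])

T_example = np.diag([2.0, 1.0, 1.0]).astype(complex)
T_example[0, 1] = T_example[1, 0] = 1.0
features = polarimetric_features(T_example)
```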

Figure 1 CNN structure

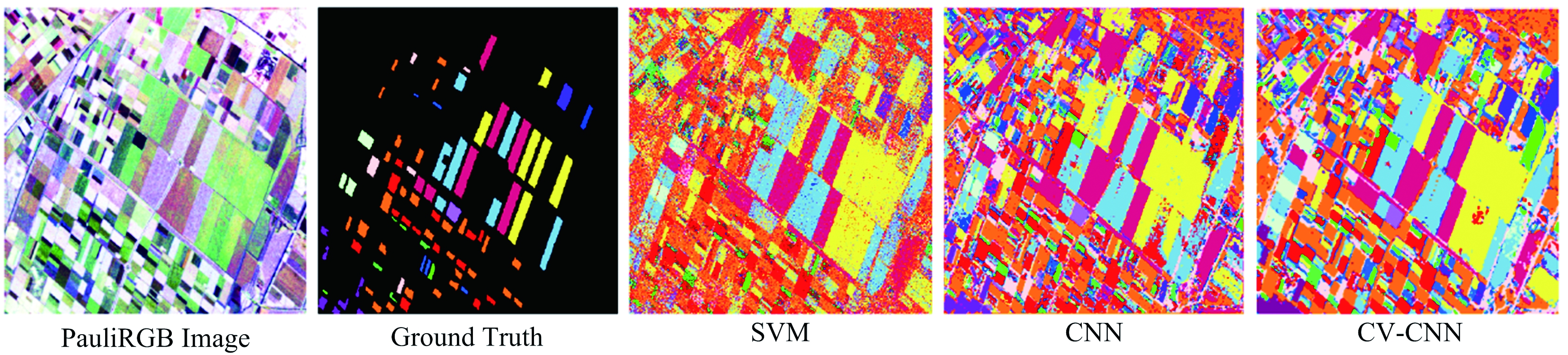

Given the complex-valued nature of polarimetric data, Zhang et al.[22] further extended the real-valued CNN (RV-CNN) to a complex-valued CNN (CV-CNN), which utilizes both the amplitude and phase information of complex SAR imagery. A complex back propagation algorithm based on stochastic gradient descent is derived for CV-CNN training. Experiments on benchmark data sets show that the classification error can be further reduced with CV-CNN instead of the conventional RV-CNN with the same degrees of freedom.
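As an illustration of what a complex-valued convolutional layer computes, the sketch below expresses a complex 2-D sliding-window product through four real-valued ones. It is a generic construction under the assumption of plain complex multiplication without conjugation, and is not claimed to reproduce the exact layer definition of [22]; the helper names are illustrative.

```python
import numpy as np

def corr2d_valid(x, w):
    """'Valid' 2-D sliding-window product (cross-correlation) of image x with kernel w."""
    kh, kw = w.shape
    out_h, out_w = x.shape[0] - kh + 1, x.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=np.result_type(x, w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * w)
    return out

def complex_corr2d(x, w):
    """Complex output assembled from real and imaginary parts of input and kernel."""
    real = corr2d_valid(x.real, w.real) - corr2d_valid(x.imag, w.imag)
    imag = corr2d_valid(x.real, w.imag) + corr2d_valid(x.imag, w.real)
    return real + 1j * imag

rng = np.random.default_rng(0)
x = rng.normal(size=(5, 5)) + 1j * rng.normal(size=(5, 5))
w = rng.normal(size=(3, 3)) + 1j * rng.normal(size=(3, 3))
out = complex_corr2d(x, w)
```

The decomposition shows why amplitude and phase are both retained: the real and imaginary channels are mixed by the kernel rather than processed independently.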

In addition to the above two representative CNN-based PolSAR image classification methods, other promising CNN-based classification methods have been proposed as well. Li et al.[23] combined sparse coding with CNN, where sparse coding is used as the down-sampling method to reduce the size of the image input to the CNN. Experiments show that the proposed method effectively reduces time consumption and memory occupation. Dong et al.[24] performed an efficient automatic differentiable architecture search on CNN for hyper-parameter optimization, where the architectures obtained by searching achieve better classification performance than handcrafted ones. Yang et al.[25] presented a CNN-based feature selection method for PolSAR image classification, which avoids random operations and improves the selection efficiency.

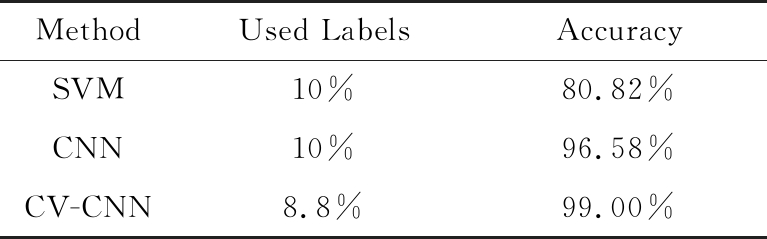

Figure 2 illustrates the PauliRGB image, the ground truth class labels and the classification results of SVM, CNN and CV-CNN on an AIRSAR Flevoland area dataset, respectively. Table 2 shows the numerical results of the three compared methods. The column “Used Labels” denotes the proportion of labels used in training.

Figure 2 The comparison of SVM, CNN and CV-CNN on Flevoland area data set

Table 2 Numerical results of compared methods

| Method | Used Labels | Accuracy |
| --- | --- | --- |
| SVM | 10% | 80.82% |
| CNN | 10% | 96.58% |
| CV-CNN | 8.8% | 99.00% |

From Figure 2 and Table 2, we can find that compared with state-of-the-art shallow machine learning methods, the deep learning-based CNN and CV-CNN achieve better agreement with the ground truth class labels. Moreover, the class label maps of CNN and CV-CNN present favorable visual effects with better contextual consistency and clearer label boundaries. The numerical results show obvious improvement of the deep learning-based methods.

1.1.2 Recurrent Neural Network

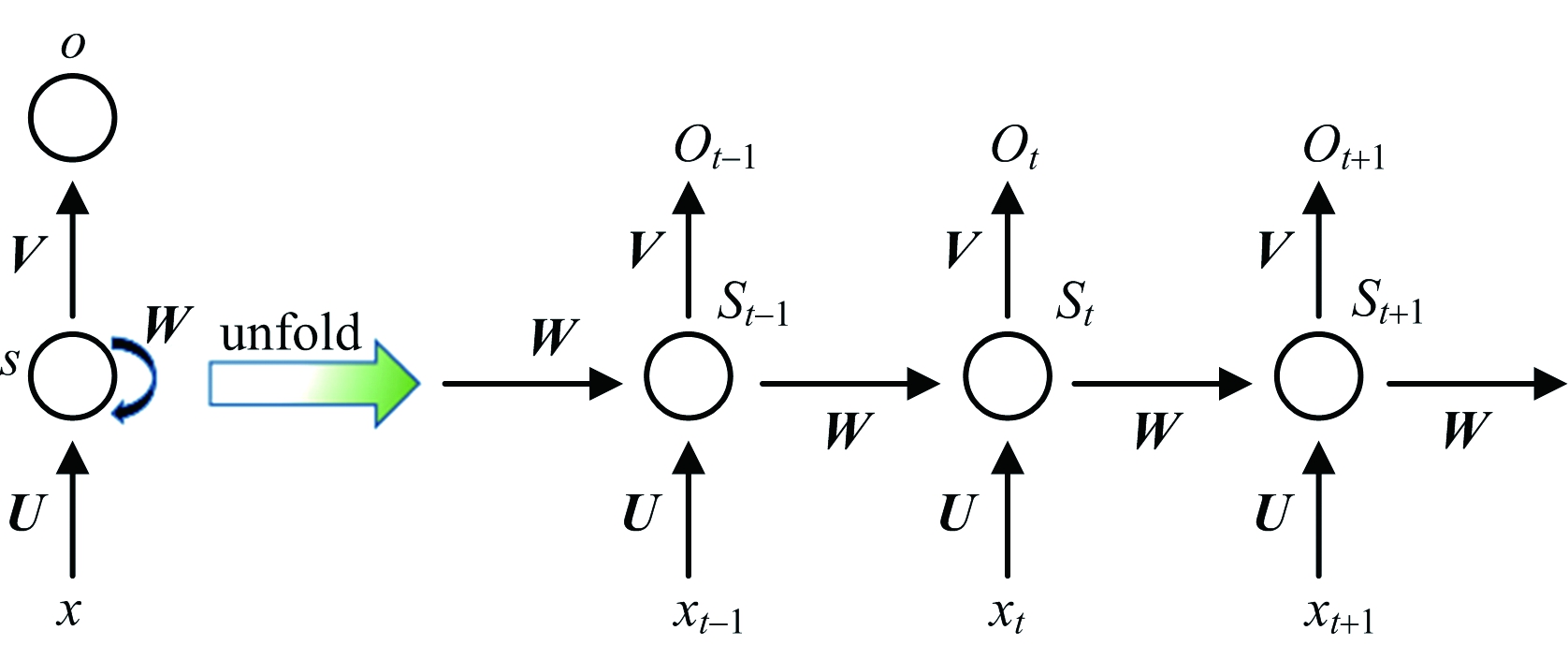

Compared with common multi-layer neural networks, RNN adds horizontal connections between the hidden layer units[26]. The input for each hidden layer involves not only the raw input of the current time but also the hidden layer output of the previous temporal point, which enables the memory function of neural networks. Figure 3 shows a typical architecture of RNN.

Figure 3 A typical RNN structure
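The recurrence described above can be written as h_t = tanh(W_x x_t + W_h h_{t-1} + b). A minimal sketch is given below, where the tanh activation, the zero initial state and the parameter names are assumptions chosen for illustration.

```python
import numpy as np

def rnn_forward(xs, W_x, W_h, b):
    """Run a simple recurrent layer over a sequence xs of shape (T, input_dim)."""
    h = np.zeros(W_h.shape[0])                 # assumed zero initial hidden state
    states = []
    for x in xs:
        h = np.tanh(W_x @ x + W_h @ h + b)     # current input plus previous hidden output
        states.append(h)
    return np.stack(states)

rng = np.random.default_rng(0)
W_x, W_h, b = rng.normal(size=(3, 2)), rng.normal(size=(3, 3)), np.zeros(3)
xs = rng.normal(size=(4, 2))
states = rnn_forward(xs, W_x, W_h, b)
```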

Long short-term memory (LSTM) networks are a special kind of RNN, designed to avoid the long-term dependency problem by means of three gates, i.e., the input gate, the output gate and the forget gate. These gates effectively control the influence of the current memory unit on the subsequent hidden layer[27].
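One time step of a standard LSTM cell can be sketched as follows. The stacking order of the gate parameters inside `W` and `b` is an assumption of this sketch, as are the function names.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def lstm_step(x, h_prev, c_prev, W, b):
    """One LSTM step. W has shape (4*H, D+H) and b has shape (4*H,);
    rows are assumed to be ordered as input, forget, output gate and candidate."""
    H = h_prev.shape[0]
    z = W @ np.concatenate([x, h_prev]) + b
    i = sigmoid(z[0:H])            # input gate
    f = sigmoid(z[H:2 * H])        # forget gate
    o = sigmoid(z[2 * H:3 * H])    # output gate
    g = np.tanh(z[3 * H:4 * H])    # candidate memory content
    c = f * c_prev + i * g         # updated memory cell
    h = o * np.tanh(c)             # updated hidden state
    return h, c

H, D = 3, 2
c_prev = np.array([1.0, -2.0, 0.5])
h, c = lstm_step(np.ones(D), np.zeros(H), c_prev, np.zeros((4 * H, D + H)), np.zeros(4 * H))
```

With all parameters set to zero, every gate equals 0.5 and the candidate is zero, so the cell simply halves its previous memory.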

Ni et al.[28] introduced the LSTM learning strategy to PolSAR image classification, integrating the LSTM network with the conditional random field (CRF) model. The LSTM networks learn both polarimetric and spatial features from pixel sequences consisting of neighboring pixels, which improves the discrimination of pixels. Wang et al.[29] also explored LSTM for classifying PolSAR data, combining convolution and LSTM to form the convolutional LSTM (ConvLSTM). Experimental results show that ConvLSTM, which uses the sequence of polarization coherent matrices, surpasses conventional CNN.

For deep supervised methods, if the selected training pixels are abundant and properly characterize all the terrains, accurate and reliable classification maps can be obtained. However, this means that the performance of deep supervised methods is heavily dependent on the choice of the training set. If the labels are scarce, or the chosen training set cannot cover all terrain classes, the classification results will be unsatisfactory and unstable.

1.2 Deep Unsupervised Learning

Different from supervised classification methods, unsupervised methods aim to classify all pixels without the help of any manual annotations. Most traditional unsupervised PolSAR image classification methods are based on target decomposition and polarimetric data distribution theories. They mostly start from an initialization generated by an initial segmentation, and then perform classification according to different strategies derived from diverse PolSAR data statistical laws[14]. The initial segmentation is usually based on target decompositions such as the Cloude-Pottier decomposition[30], the three-component decomposition[31] and the four-component decomposition[32]. The commonly used PolSAR data statistical laws include the Wishart distribution[33], the K-distribution[34] and the G-distribution[35].

Deep unsupervised learning PolSAR image classification aims at learning discriminative and effective polarimetric representations making full use of the unlabeled data with deep models, where DBN, DAE and GAN are the most extensively studied ones. It is noteworthy that for the application of these methods, a small number of labels are usually employed to fine-tune the deep networks after the unsupervised feature learning.

1.2.1 Deep Belief Network

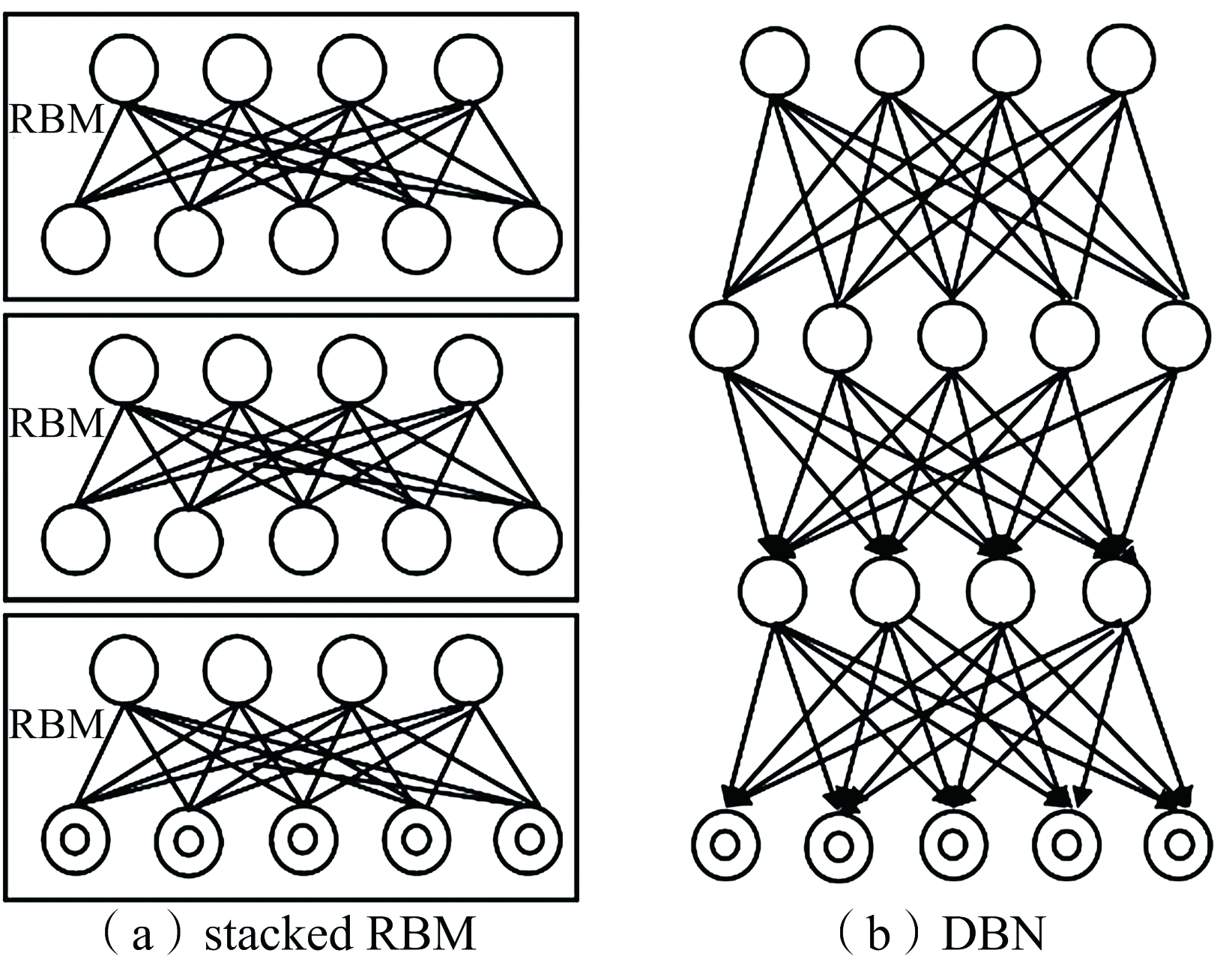

A restricted Boltzmann machine (RBM) is a generative stochastic neural network that can learn a probability distribution over its inputs[36]. RBMs are shallow networks consisting of two layers, i.e., a visible layer and a hidden layer. Unlike common neural networks, an RBM does not allow intra-layer connections between nodes; nodes are connected only across layers. Due to its shallow structure, an RBM is not able to analyze complex data. Therefore, DBN was developed to tackle this issue.
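For reference, a generic Bernoulli-Bernoulli RBM can be sketched as below, with the energy E(v, h) = -aᵀv - bᵀh - vᵀWh and a single step of contrastive divergence (CD-1) as the learning rule. This is the textbook binary RBM under assumed parameter names and learning rate, not the Wishart-based variants discussed later in this subsection.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def rbm_energy(v, h, W, a, b):
    """Energy of a joint configuration; W has shape (n_visible, n_hidden)."""
    return float(-(a @ v) - (b @ h) - (v @ W @ h))

def cd1_step(v0, W, a, b, rng, lr=0.1):
    """One contrastive-divergence (CD-1) update from a single visible vector v0."""
    p_h0 = sigmoid(b + v0 @ W)                           # hidden probabilities given data
    h0 = (rng.random(p_h0.shape) < p_h0).astype(float)   # sampled hidden states
    p_v1 = sigmoid(a + W @ h0)                           # reconstruction of the visible layer
    p_h1 = sigmoid(b + p_v1 @ W)                         # hidden probabilities given reconstruction
    W = W + lr * (np.outer(v0, p_h0) - np.outer(p_v1, p_h1))
    a = a + lr * (v0 - p_v1)
    b = b + lr * (p_h0 - p_h1)
    return W, a, b

rng = np.random.default_rng(0)
v0 = np.array([1.0, 0.0, 1.0, 0.0])
W1, a1, b1 = cd1_step(v0, np.zeros((4, 2)), np.zeros(4), np.zeros(2), rng)
```

The absence of intra-layer connections is what makes the two conditional distributions factorize, so each layer can be sampled in one vectorized step.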

DBN is a deep architecture composed of multiple stacks of RBM. Figure 4 illustrates the respective structures of stacked RBM and DBN. Each RBM model performs a non-linear transformation on its input vectors and produces output which serves as input for the next RBM model in the sequence. As a generative model, DBNs can be used in either an unsupervised or a supervised scenario. Precisely, in feature learning, layer-by-layer pre-training is performed in an unsupervised manner and used with back propagation technique (i.e. gradient descent) to conduct classification by fine-tuning on a small labeled dataset.

Figure 4 Structure of stacked RBM and DBN

Inspired by the power of DBN, Lv et al. modeled PolSAR data with the general DBN, making full use of the numerous unlabeled PolSAR pixels[37]. Based on the fact that the covariance matrix generally follows the Wishart distribution, Guo et al. made a preliminary exploration by proposing a Wishart RBM (WRBM), in which each visible unit corresponds to one covariance matrix[38]. Liu et al. made a further study by proposing the Wishart-Bernoulli RBM (WBRBM) for PolSAR data, whose energy function is defined in a mathematically stricter manner[17]. In WBRBM, all visible units jointly correspond to one PolSAR pixel, rather than each visible unit corresponding to one covariance matrix as in WRBM. Moreover, only one WBRBM is used in the employed DBN, and it is located in the lowest layer, which is much more reasonable. During model training, the coherency matrix is used directly to represent a PolSAR pixel without any manual feature extraction, which makes the method simple and time-saving.

1.2.2 Deep Autoencoder

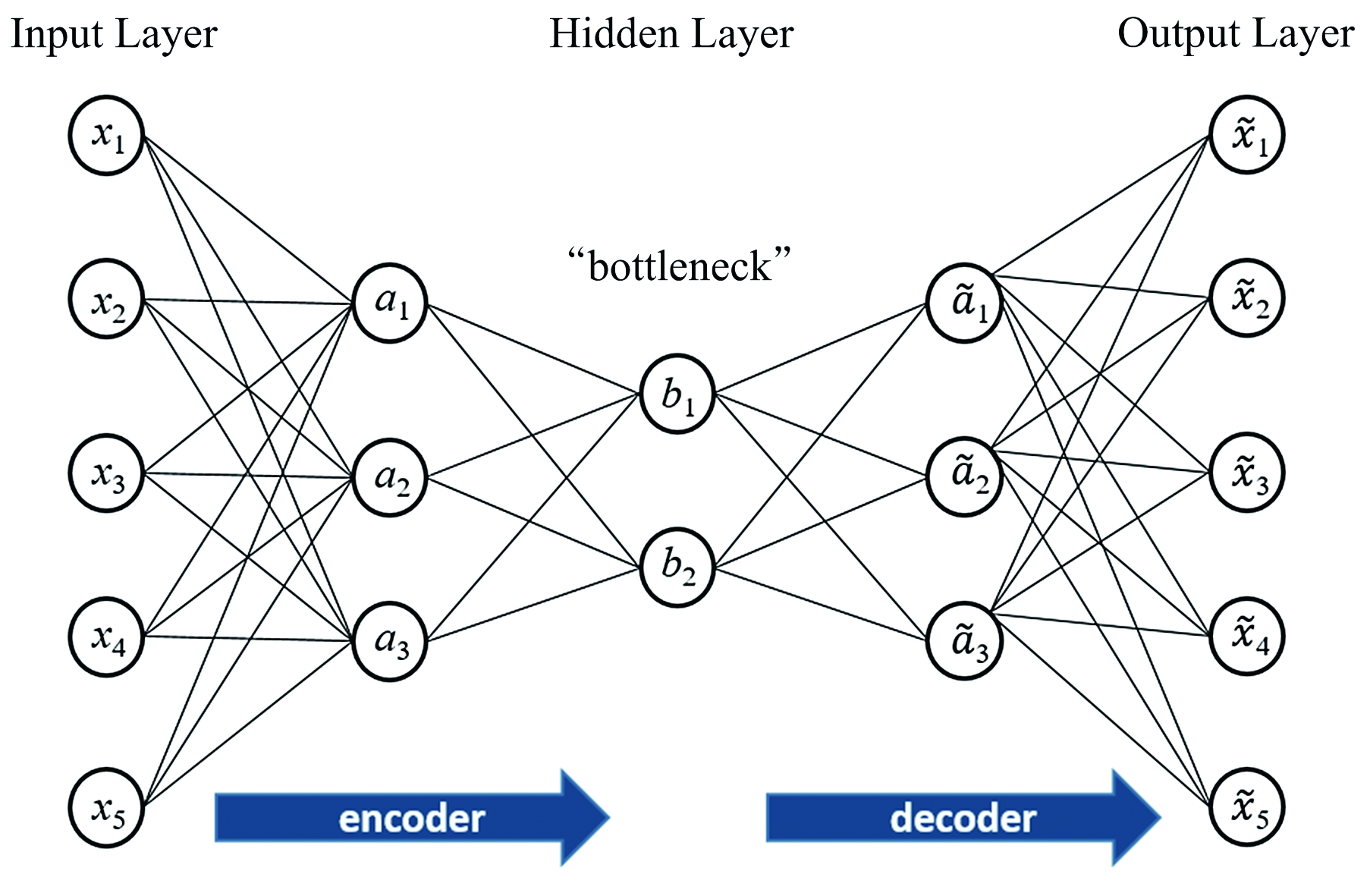

Autoencoder (AE) is an unsupervised learning technique where neural networks are leveraged for the task of representation learning. As shown in Figure 5, an autoencoder is composed of two main parts, i.e., an encoder that maps the input into the code, and a decoder that maps the code to a reconstruction of the input[39]. A bottleneck is designed to yield a compressed representation of the original input, where the feature dimension is typically reduced by training the deep network to ignore the insignificant part and keep only the most relevant aspects of the input.

Figure 5 Structure of deep autoencoder
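The encoder, bottleneck and decoder can be illustrated with the following minimal single-hidden-layer sketch. The 9-dimensional input (for example, the real degrees of freedom of a 3×3 coherency matrix), the 3-dimensional code and the tanh activation are assumptions made only for illustration.

```python
import numpy as np

def autoencoder_forward(x, W_enc, b_enc, W_dec, b_dec):
    """Encode x into a low-dimensional code, then decode it into a reconstruction."""
    code = np.tanh(W_enc @ x + b_enc)   # bottleneck representation
    recon = W_dec @ code + b_dec        # reconstruction of the input
    return code, recon

def reconstruction_error(x, recon):
    """Mean squared error used as the training objective."""
    return float(np.mean((x - recon) ** 2))

rng = np.random.default_rng(0)
x = rng.normal(size=9)
W_enc, b_enc = rng.normal(size=(3, 9)), np.zeros(3)
W_dec, b_dec = rng.normal(size=(9, 3)), np.zeros(9)
code, recon = autoencoder_forward(x, W_enc, b_enc, W_dec, b_dec)
error = reconstruction_error(x, recon)
```

Training adjusts the encoder and decoder weights to reduce this error, after which the code serves as the learned feature.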

Zhang et al. first introduced the autoencoder to PolSAR image classification[40]. The authors stacked sparse autoencoders into a multilayer AE network and combined it with local spatial information, where superpixels are utilized to integrate the contextual information of the neighborhood and the AE network is used to learn the features for discriminating the class of each pixel. In recent years, more and more attention has been paid to integrating the distribution characteristics of PolSAR data into machine learning algorithms. Considering the complex Wishart distribution of the PolSAR coherency matrix, Xie et al. proposed a novel feature extraction model for the construction of the AE network, named Wishart AE (WAE)[19]. It combines the Wishart distance with the backpropagation algorithm in the training procedure of AE. Generally, features learned by WAE should be more suitable for the classification task. Wang et al. conducted a further study on the application of the autoencoder to PolSAR image classification[41]. The authors presented a new feature learning network called the mixture AE (MAE), where both the Wishart distance and the Euclidean distance are applied in the cost function to evaluate the error between the input and the output. Chen et al. combined multilayer projective dictionary pair learning (MDPL) with SAE, where MDPL serves as the high-dimensional feature extractor for SAE[42].
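For reference, the Wishart distance commonly used in PolSAR classification between a coherency matrix T and a class center Σ is d(T, Σ) = ln|Σ| + Tr(Σ⁻¹T). The sketch below implements this standard form and a nearest-center rule; how WAE and MAE embed the distance in their cost functions is not reproduced here, and the function names are illustrative.

```python
import numpy as np

def wishart_distance(T, sigma):
    """Standard Wishart distance ln|sigma| + Tr(sigma^{-1} T) for Hermitian matrices."""
    _, logdet = np.linalg.slogdet(sigma)
    return float(logdet + np.trace(np.linalg.solve(sigma, T)).real)

def nearest_class(T, centers):
    """Index of the class center with the smallest Wishart distance to T."""
    return int(np.argmin([wishart_distance(T, c) for c in centers]))

I3 = np.eye(3, dtype=complex)
d_same = wishart_distance(I3, I3)
label = nearest_class(9 * I3, [I3, 10 * I3])
```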

1.2.3 Generative Adversarial Networks

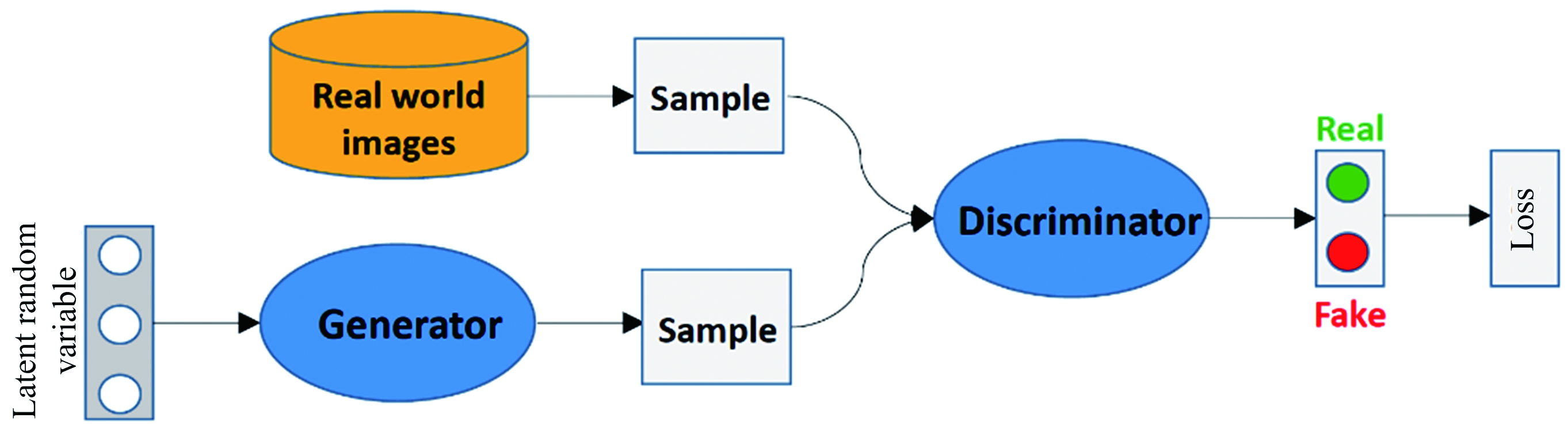

Generative adversarial network (GAN) is an unsupervised learning paradigm that automatically discovers and learns the regularities or patterns in the input data, such that the model can be used to generate new examples that could plausibly have been drawn from the original dataset[43].

As shown in Figure 6, a GAN model is composed of two sub-models, i.e., the generator model, which is trained to generate new examples, and the discriminator model, which tries to classify examples as either real (from the domain) or fake (generated). After proper training, the fake data produced by the generator are indistinguishable from the real ones, i.e., they share the same distribution as the real data, and can therefore be regarded as sufficiently realistic. The two models are trained together in an adversarial manner until the discriminator is fooled about half the time, which means that the generator is producing plausible examples[43].

Figure 6 Illustration of a GAN
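The adversarial objective can be sketched through the two loss functions below, where `d_real` and `d_fake` denote the discriminator's output probabilities on real and generated samples. The non-saturating generator loss is a common choice assumed here for illustration.

```python
import numpy as np

def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy: real samples should score 1, generated samples 0."""
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake)))

def generator_loss(d_fake):
    """Non-saturating generator loss: generated samples should be scored as real."""
    return float(-np.mean(np.log(d_fake)))

half = np.full(8, 0.5)                       # discriminator fooled half the time
d_loss_equilibrium = discriminator_loss(half, half)
g_loss_equilibrium = generator_loss(half)
```

When the discriminator outputs 0.5 everywhere, its loss equals 2 ln 2, which corresponds to the situation in which real and generated data can no longer be told apart.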

Based on GAN, Liu et al. presented a novel version named Task-Oriented GAN for PolSAR image classification and clustering[21]. Besides the two typical parts in GAN, i.e., generator and discriminator, the authors designed a third part called TaskNet, where the TaskNet is employed to accomplish a certain task. Two tasks, PolSAR image classification and clustering, are studied in the paper, where TaskNet acts as a classifier and a clusterer, respectively. Experimental results demonstrate that the proposed approach achieves good performance in both supervised and unsupervised settings, which is an effective extension of the traditional GAN.

Traditional unsupervised methods, which are based on polarimetric statistical laws, are fast and fully automatic; however, their performance is usually unsatisfactory. The most recent deep unsupervised methods employ feature representation techniques to extract meaningful and strong indicators without any labels and then use limited labels to fine-tune the deep networks, which greatly reduces the dependence of performance on labels.

1.3 Deep Semisupervised Learning

As is well acknowledged, supervised methods can achieve promising classification performance; however, they rely heavily on large amounts of labels. Unsupervised methods are fast and simple, but their results are usually unsatisfactory. Different from the above two branches of methods, semisupervised methods focus on improving classification performance and generalization ability by virtue of both labeled and unlabeled pixels[18]. They are particularly suitable for image classification tasks with limited labels and massive unlabeled pixels.

The most extensively applied semisupervised learning methods include self-training, graph-based and co-training approaches. However, for co-training models, it is difficult to obtain sufficient and conditionally independent views of PolSAR data. We find one semisupervised PolSAR classification method based on improved co-training in the literature[44]. However, it is not based on deep models and is thus out of the scope of this paper. Therefore, we do not analyze this category of methods here. The self-training and graph-based approaches have been integrated with diverse deep learning models, which are introduced in the following subsections respectively.

1.3.1 Deep Self-training Methods

In the self-training process, a base learner is first trained on a small amount of labeled data and then applied to make predictions on the unlabeled data. Then, iteratively, the examples with the most confident predictions are adopted as “pseudo labels” in subsequent training iterations of the classifier, so that the labeled training set is progressively expanded with these self-labeled examples[45]. Since the labels are usually insufficient for training, misclassifying a certain number of unlabeled pixels is unavoidable. Therefore, the essential point of self-training is to design an unlabeled sample selection scheme that can boost the training process.
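The self-training loop can be sketched as follows. A nearest-centroid classifier stands in for the deep base learner, and the confidence threshold, the number of rounds and the function names are assumptions of this sketch; every class is assumed to have at least one labeled sample.

```python
import numpy as np

def fit_centroids(X, y, n_classes):
    return np.stack([X[y == c].mean(axis=0) for c in range(n_classes)])

def predict_proba(X, centroids):
    """Softmax over negative squared distances to the class centroids."""
    d2 = ((X[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    p = np.exp(-d2 + d2.min(axis=1, keepdims=True))
    return p / p.sum(axis=1, keepdims=True)

def self_train(X_lab, y_lab, X_unlab, n_classes, rounds=5, threshold=0.9):
    X_lab, y_lab, pool = X_lab.copy(), y_lab.copy(), X_unlab.copy()
    for _ in range(rounds):
        if len(pool) == 0:
            break
        proba = predict_proba(pool, fit_centroids(X_lab, y_lab, n_classes))
        confident = proba.max(axis=1) >= threshold       # most confident predictions
        if not confident.any():
            break
        # Add the confident samples with their pseudo labels to the training set.
        X_lab = np.vstack([X_lab, pool[confident]])
        y_lab = np.concatenate([y_lab, proba[confident].argmax(axis=1)])
        pool = pool[~confident]
    return fit_centroids(X_lab, y_lab, n_classes), X_lab, y_lab

rng = np.random.default_rng(0)
X_lab, y_lab = np.array([[-3.0, -3.0], [3.0, 3.0]]), np.array([0, 1])
X_unlab = np.vstack([rng.normal(-3, 0.5, size=(20, 2)), rng.normal(3, 0.5, size=(20, 2))])
centroids, X_out, y_out = self_train(X_lab, y_lab, X_unlab, n_classes=2)
```

The selection rule in the `confident` line is the part that a practical method has to design carefully, since wrongly pseudo-labeled samples are fed back into training.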

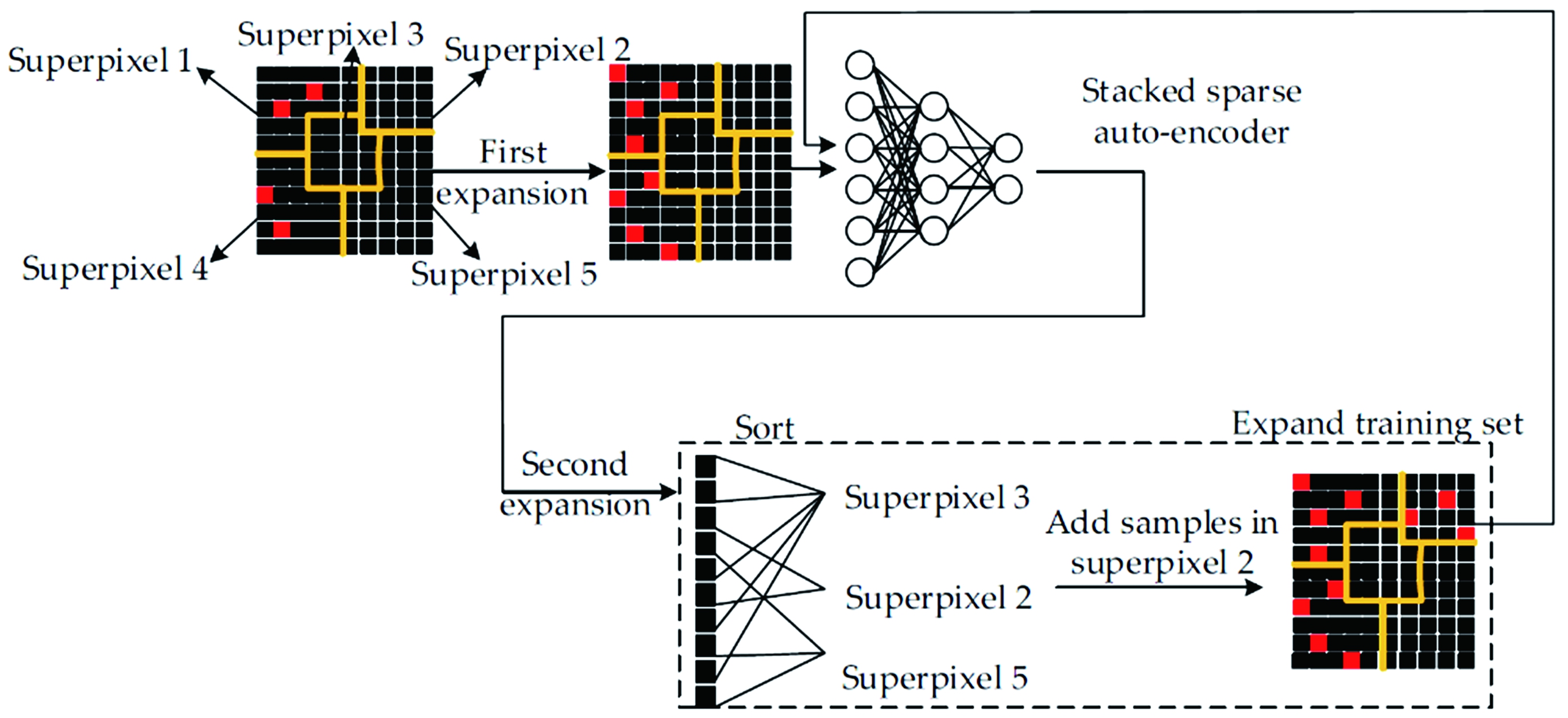

Li et al. proposed a self-training PolSAR image classification method based on superpixels and deep autoencoders, where the pipeline is shown in Figure 7[46]. First, the Pauli-RGB image of PolSAR data is over-segmented into superpixels to obtain a large number of homogeneous areas. Based on the superpixels, the method exploits two sample selection strategies to augment the training set. In the first expansion, the clustering assumption is used to directly propagate labels to samples in the same superpixel. In the second expansion, the candidate samples with the highest prediction confidence are first obtained and then the samples within the superpixel which contains the minimum number of samples are added to the training set. These two expansion strategies consider both the reliability and diversity of samples and are demonstrated to be effective.

Figure 7 Flow of sample selection based on self-training and superpixels

Fang et al. utilized the K-means clustering approach to partition the whole PolSAR image into a number of clusters without any labels, where pseudo labels are generated based on the clusters[47]. Then both the labeled samples and the pseudo labels are utilized to train a 3DCNN (3-dimensional CNN) network. The pseudo labels preserve the unsupervised representations, which can be fused with the supervised features. The fused features are then employed to improve the classification accuracy.

1.3.2 Deep Graph-based Models

The graph-based method is an important and representative semisupervised approach, which has a solid mathematical foundation and a favorable representation of the inherent connections between pixels. In a graph-based image classification method, a graph is first constructed on an image, which consists of nodes representing pixels and weighted edges corresponding to the similarities between the pixels. Then, the class information spreads through the edges from the labeled pixels to the unlabeled ones, which is mostly implemented using a well-formulated optimization algorithm[18]. The works in [48-50] report semisupervised graph-based PolSAR image classification results with shallow machine learning methods.
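The spreading of class information along weighted edges can be illustrated with a basic iterative label propagation scheme, sketched below under the assumption that every node has at least one edge. This is a generic shallow algorithm with hypothetical names, not the specific optimization used in [18] or [48-50].

```python
import numpy as np

def propagate_labels(W, y_init, labeled_mask, n_classes, n_iter=200):
    """Iteratively average neighbor scores while clamping the labeled nodes."""
    idx = np.flatnonzero(labeled_mask)
    F = np.zeros((len(y_init), n_classes))
    F[idx, y_init[idx]] = 1.0                  # one-hot scores for labeled nodes
    clamp = F[idx].copy()
    P = W / W.sum(axis=1, keepdims=True)       # row-normalized edge weights
    for _ in range(n_iter):
        F = P @ F                              # each node averages its neighbors
        F[idx] = clamp                         # labeled nodes keep their labels
    return F.argmax(axis=1)

# Toy chain of 6 nodes with a weak edge between nodes 2 and 3.
W = np.zeros((6, 6))
for i in range(5):
    W[i, i + 1] = W[i + 1, i] = 1.0
W[2, 3] = W[3, 2] = 0.01
y_init = np.array([0, -1, -1, -1, -1, 1])      # -1 marks unlabeled nodes
mask = np.array([True, False, False, False, False, True])
pred = propagate_labels(W, y_init, mask, n_classes=2)
```

The weak edge plays the role of a low similarity between dissimilar pixels, so the predicted label changes exactly at that boundary.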

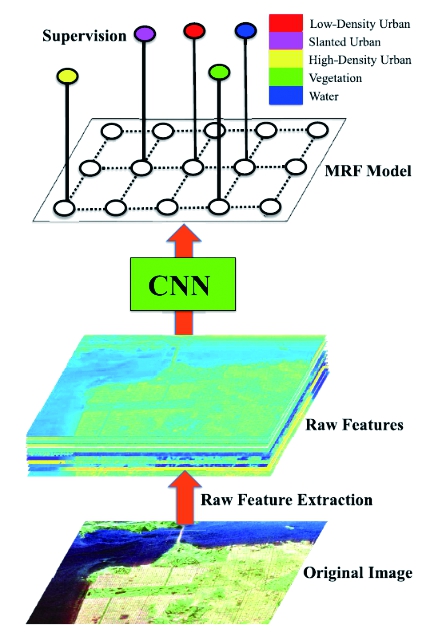

The incorporation of deep learning techniques into graph-based semisupervised methods further improves the performance of PolSAR image classification. As shown in Figure 8, Bi et al.[18] established a graph on a given PolSAR image, which can be regarded as a Markov random field (MRF). The supervision imposes constraints from randomly selected human-labeled pixels, and the CNN outputs pixel predictions to the MRF. The pairwise smoothness prior embedded in the MRF encourages class label smoothness during class label propagation[18]. An iterative optimization algorithm is designed to minimize the energy of the semisupervision-based loss function by alternately updating the network parameters and the pixelwise class labels.

Figure 8 Overview of graph-based semisupervised deep learning model

Liu et al. proposed a graph convolutional network (GCN) obtained by architecture search for PolSAR image classification[51]. This method focuses on reducing the number of parameters in GCN, which is achieved by a newly designed differentiable architecture search method. In addition, a new weighted mini-batch algorithm is developed to reduce the memory consumption and ensure the balance of the sample distribution. Ren et al. exploited the rich spatial and spectral information contained in a superpixel-based weighted graph structure and proposed a semisupervised approach, namely MEWGCN[52]. The proposed architecture includes a graph evolving module that performs automatic graph refinement through a kernel diffusion process combining the initial graph structure with latent similarity measures. By employing the proposed graph integration module, it learns the relative contribution of each scale and enables more effective feature extraction.

1.4 Deep Active Learning Methods

Active learning is an important branch of machine learning, whose key idea is that a machine learning algorithm can achieve better performance with fewer training labels if it is allowed to choose samples to be annotated[53]. Active learning is well-motivated in many machine learning problems, where unlabeled data may be abundant or easily obtained, but labels are difficult, time-consuming, or expensive to obtain.
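One common way to let the algorithm choose the samples to be annotated is uncertainty sampling, sketched below with the entropy of the predicted class probabilities as the selection criterion. Entropy is only one possible criterion and is an assumption of this sketch, as is the function name.

```python
import numpy as np

def select_most_uncertain(probs, k):
    """Indices of the k samples whose predicted class distribution has the highest entropy."""
    entropy = -np.sum(probs * np.log(probs + 1e-12), axis=1)
    return np.argsort(-entropy)[:k]

probs = np.array([
    [0.98, 0.01, 0.01],   # confident prediction
    [0.34, 0.33, 0.33],   # nearly uniform, most uncertain
    [0.60, 0.30, 0.10],
    [0.50, 0.50, 0.00],
])
query = select_most_uncertain(probs, k=2)
```

The selected indices are the samples that would be sent to the human annotator in the next round.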

Since labels for PolSAR images are difficult to obtain, it is essential to investigate the connection and interaction between active learning and deep learning, in the hope of exploiting the high labeling efficiency of the former and the strong discriminative ability of the latter[54].

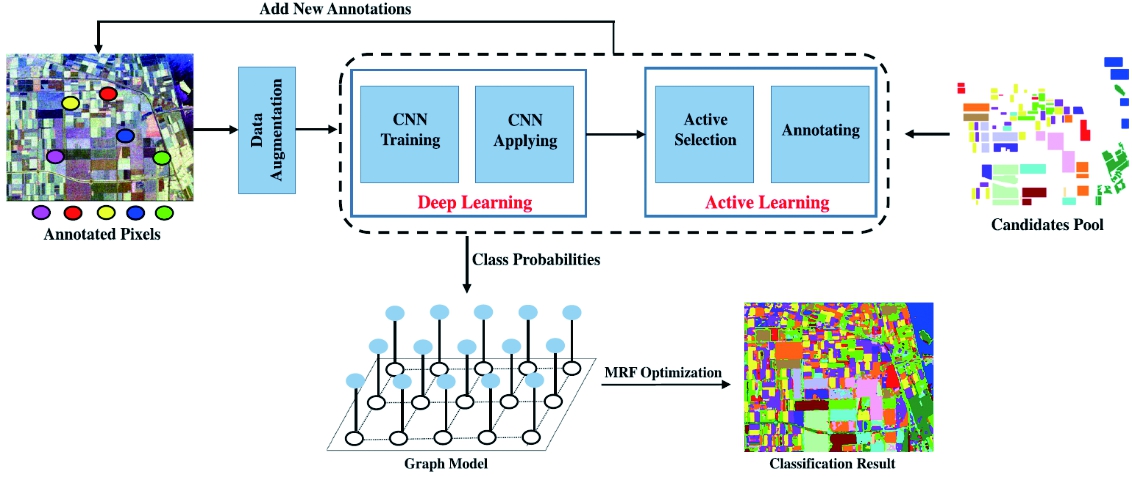

Motivated by the above analysis, Bi et al. brought forward an active deep learning approach for PolSAR image classification[54]. As shown in Figure 9, the implementation of the active deep learning is an iterative process. In each iteration, a CNN is first trained and applied based on current annotated pixels with data augmentation, then the active selection is conducted to request new annotations which act as input for the CNN retraining of next iteration. When the iteration stopping criterion is met, the class probabilities of CNN are output to a graph model built on the PolSAR image. By solving the MRF optimization problem which introduces label smoothness priors, the final classification result of the processed image is obtained[54].

Figure 9 Illustration of deep active learning model

Liu et al. presented a novel active ensemble deep learning PolSAR image classification method. In this method, a deep learning model is first trained using limited training data. Then, the model’s snapshots near its convergence together serve as a committee to evaluate the importance of each sample instance. The most informative samples are added to the training set to further fine-tune the network until the resources run out or the model’s performance is satisfactory[55].
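The committee idea can be illustrated with vote entropy, a standard query-by-committee disagreement measure, computed over the predictions of several model snapshots. Whether [55] uses this exact measure is not stated here, so the criterion and the function name should be read as assumptions.

```python
import numpy as np

def committee_disagreement(snapshot_probs):
    """Vote entropy per sample for predictions of shape (n_snapshots, n_samples, n_classes)."""
    votes = snapshot_probs.argmax(axis=2)                 # hard vote of each snapshot
    n_snapshots, n_classes = votes.shape[0], snapshot_probs.shape[2]
    frac = np.stack([(votes == c).mean(axis=0) for c in range(n_classes)], axis=1)
    safe = np.where(frac > 0, frac, 1.0)                  # log(1) = 0 for classes with no votes
    return -np.sum(frac * np.log(safe), axis=1)

# Three snapshots, two samples, two classes.
snapshot_probs = np.array([
    [[0.9, 0.1], [0.6, 0.4]],
    [[0.8, 0.2], [0.3, 0.7]],
    [[0.7, 0.3], [0.2, 0.8]],
])
scores = committee_disagreement(snapshot_probs)
most_informative = int(np.argmax(scores))
```

Samples on which the snapshots disagree most receive the highest score and are the natural candidates for annotation.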

2 Current Challenges and Future Trends

Despite the above promising advances, there are still some challenges and opportunities confronting the PolSAR image classification task.

1) Label scarcity issue. Massive PolSAR images have been collected in recent years. However, the classification of PolSAR images still lags far behind data gathering, where the most significant barrier lies in the scarcity of labels. The lack of labels greatly hinders the performance improvement of machine learning algorithms, especially deep learning-based methods. Therefore, it is important to develop methods which require few labels yet have good generalization ability.

2) Information fusion. As more and more remote sensing systems are deployed, remote sensing data with different modalities are being collected. Compared with single-source data, multi-modality data reveal more information about the observed targets. Therefore, remote sensing image interpretation that fuses diverse data sources is of great interest.

3) Complexity of noise. The complexity of SAR noise poses a great challenge to PolSAR image classification. It is important to eliminate the influence of noise on image classification.

Few-shot machine learning is a promising option for solving the label scarcity issue. Meta-learning[56] and self-supervised learning[57] are representative few-shot machine learning paradigms. Only a few works on these topics can be found[58-59]; however, the potential of few-shot learning is far from being fully explored and deserves more investigation.

The fusion of PolSAR data from different PolSAR sensors and the fusion of PolSAR and multispectral data were studied in the literature[60-61], demonstrating the strong power of data fusion. The potential of fusion-based remote sensing data interpretation needs to be explored further.

Besides noise removal prior to PolSAR image classification, removing noise while classifying images is a promising research direction. Recent work[5] leverages low-rank matrix factorization to simultaneously extract discriminative features and remove complex noise. More methods are expected to be developed in the future.

3 Conclusion

In this paper, we first introduce the characteristics of PolSAR and the significance of PolSAR image classification. Next, we review the extensively applied deep learning architectures and summarize their application to land cover classification with PolSAR data. From the analysis, we can conclude that deep learning techniques are particularly effective in automatic polarimetric feature extraction, which considerably elevates the classification performance. Finally, we analyze the current challenges and opportunities confronting the PolSAR image classification task, and offer possible solutions to tackle them.

[1] WANG X, ZHANG L M, WANG N, et al. Joint Polarimetric-Adjacent Features Based on LCSR for PolSAR Image Classification[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing,2021,14:6230-6243.

[2] SINCLAIR G. The Transmission and Reception of Elliptically Polarized Radar Waves[J]. Proceedings of the IRE,1950,38(2):148-151.

[3] ZYL J J V, ZEBKER H A, ELACHI C. Imaging Radar Polarization Signatures: Theory and Observation[J]. Radio Science,1987,22(4):529-543.

[4] HE C, HE B K, TU M X, et al. Fully Convolutional Networks and A Manifold Graph Embedding-Based Algorithm for PolSAR Image Classification[J]. Remote Sensing,2020,12(9):1467.

[5] BI H X, YAO J, WEI Z Q, et al. PolSAR Image Classification Based on Robust Low-Rank Feature Extraction and Markov Random Field[J]. IEEE Geoscience and Remote Sensing Letters,2020,PP(99):1-5.

[6] KONG J A, SWARTZ A A, YUEH H A, et al. Identification of Terrain Cover Using the Optimum Polarimetric Classifier[J]. Journal of Electromagnetic Waves and Applications,1988,2(2):171-194.

[7] TAO M L, ZHOU F, LIU Y, et al. Tensorial Independent Component Analysis-Based Feature Extraction for Polarimetric SAR Data Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2015,53(5):2481-2495.

[8] POTTIER E, SAILLARD J. On Radar Polarization Target Decomposition Theorems with Application to Target Classification, by Using Neural Network Method[C]∥1991 Seventh International Conference on Antennas and Propagation, York:IET,1991:265-268.

[9] ANTROPOV O, RAUSTE Y, ASTOLA H, et al. Land Cover and Soil Type Mapping from Spaceborne PolSAR Data at L-Band with Probabilistic Neural Network[J]. IEEE Trans on Geoscience and Remote Sensing,2014,52(9):5256-5270.

[10] LARDEUX C, FRISON P L, TISON C, et al. Support Vector Machine for Multifrequency SAR Polarimetric Data Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2009,47(12):4143-4152.

[11] MASJEDI A, VALADAN ZOEJ M J, MAGHSOUDI Y. Classification of Polarimetric SAR Images Based on Modeling Contextual Information and Using Texture Features[J]. IEEE Trans on Geoscience and Remote Sensing,2016,54(2):932-943.

[12] BI H X, XU L, CAO X Y, et al. Polarimetric SAR Image Semantic Segmentation with 3D Discrete Wavelet Transform and Markov Random Field[J]. IEEE Trans on Image Processing,2020,29:6601-6614.

[13] ERSAHIN K, CUMMING I G, WARD R K. Segmentation and Classification of Polarimetric SAR Data Using Spectral Graph Partitioning[J]. IEEE Trans on Geoscience and Remote Sensing,2010,48(1):164-174.

[14] BI H X, SUN J, XU Z B. Unsupervised PolSAR Image Classification Using Discriminative Clustering[J]. IEEE Trans on Geoscience and Remote Sensing,2017,55(6):3531-3544.

[15] ZHOU Y, WANG H P, XU F, et al. Polarimetric SAR Image Classification Using Deep Convolutional Neural Networks[J]. IEEE Geoscience and Remote Sensing Letters,2016,13(12):1935-1939.

[16] HINTON G E, SALAKHUTDINOV R R. Reducing the Dimensionality of Data with Neural Networks[J]. Science,2006,313(5786):504-507.

[17] LIU F, JIAO L C, HOU B, et al. POL-SAR Image Classification Based on Wishart DBN and Local Spatial Information[J]. IEEE Trans on Geoscience and Remote Sensing,2016,54(6):3292-3308.

[18] BI H X, SUN J, XU Z B. A Graph-Based Semisupervised Deep Learning Model for PolSAR Image Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2019,57(4):2116-2132.

[19] XIE W, JIAO L C, HOU B, et al. POLSAR Image Classification via Wishart-AE Model or Wishart-CAE Model[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing,2017,10(8):3604-3615.

[20] GENG J, WANG H Y, FAN J C, et al. SAR Image Classification via Deep Recurrent Encoding Neural Networks[J]. IEEE Trans on Geoscience and Remote Sensing,2018,56(4):2255-2269.

[21] LIU F, JIAO L, TANG X. Task-Oriented GAN for PolSAR Image Classification and Clustering[J]. IEEE Trans on Neural Networks and Learning Systems,2019,30(9):2707-2719.

[22] ZHANG Z M, WANG H P, XU F, et al. Complex-Valued Convolutional Neural Network and its Application in Polarimetric SAR Image Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2017,55(12):7177-7188.

[23] LI Y Y, CHEN Y Q, LIU G Y, et al. A Novel Deep Fully Convolutional Network for PolSAR Image Classification[J]. Remote Sensing,2018,10(12):1984.

[24] DONG H W, ZOU B, ZHANG L M, et al. Automatic Design of CNNs via Differentiable Neural Architecture Search for PolSAR Image Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2020,58(9):6362-6375.

[25] YANG C, HOU B, REN B, et al. CNN-Based Polarimetric Decomposition Feature Selection for PolSAR Image Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2019,57(11):8796-8812.

[26] LIU P F, QIU X P, HUANG X J. Recurrent Neural Network for Text Classification with Multi-Task Learning[C]∥Proceedings of the Twenty-Fifth International Joint Conference on Artificial Intelligence,New York: AAAI Press,2016:2873-2879.

[27] SAK H, SENIOR A, BEAUFAYS F. Long Short-Term Memory Recurrent Neural Network Architectures for Large Scale Acoustic Modeling[C]∥Interspeech 2014,[S.l.]: ISCA,2014:1-5.

[28] NI J, ZHANG F, YIN Q, et al. Random Neighbor Pixel-Block-Based Deep Recurrent Learning for Polarimetric SAR Image Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2021,59(9):7557-7569.

[29] WANG L, XU X, DONG H, et al. Exploring Convolutional LSTM for PolSAR Image Classification[C]∥2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain: IEEE,2018:8452-8455.

[30] CLOUDE S R, POTTIER E. An Entropy Based Classification Scheme for Land Applications of Polarimetric SAR[J]. IEEE Trans on Geoscience and Remote Sensing,1997,35(1):68-78.

[31] FREEMAN A, DURDEN S L. A Three-Component Scattering Model for Polarimetric SAR Data[J]. IEEE Trans on Geoscience and Remote Sensing,1998,36(3):963-973.

[32] YAMAGUCHI Y, MORIYAMA T, ISHIDO M, et al. Four-Component Scattering Model for Polarimetric SAR Image Decomposition[J]. IEEE Trans on Geoscience and Remote Sensing,2005,43(8):1699-1706.

[33] LEE J S, GRUNES M R, AINSWORTH T L, et al. Unsupervised Classification Using Polarimetric Decomposition and the Complex Wishart Classifier[J]. IEEE Trans on Geoscience and Remote Sensing,1999,37(5):2249-2258.

[34] YUEH S H, KONG J A, JAO J K, et al. K-Distribution and Polarimetric Terrain Radar Clutter[J]. Journal of Electromagnetic Waves and Applications,1989,3(8):747-768.

[35] FREITAS C C, FRERY A C, CORREIA A H. The Polarimetric G Distribution for SAR Data Analysis[J]. Environmetrics,2005,16(1):13-31.

[36] SUTSKEVER I, HINTON G E, TAYLOR G W. The Recurrent Temporal Restricted Boltzmann Machine[C]∥Advances in Neural Information Processing Systems,[S.l.]:[s.n.],2008:1601-1608.

[37] LV Q, DOU Y, NIU X, et al. Urban Land Use and Land Cover Classification Using Remotely Sensed SAR Data Through Deep Belief Networks[J]. Journal of Sensors,2015,2015:1-10.

[38] GUO Y H, WANG S, GAO C Q, et al. Wishart RBM Based DBN for Polarimetric Synthetic Radar Data Classification[C]∥2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy: IEEE,2015:1841-1844.

[39] BALDI P. Autoencoders, Unsupervised Learning, and Deep Architectures[J]. Proceedings of ICML Workshop on Unsupervised and Transfer Learning,2012,27(7):37-49.

[40] ZHANG L, MA W P, ZHANG D. Stacked Sparse Autoencoder in PolSAR Data Classification Using Local Spatial Information[J]. IEEE Geoscience and Remote Sensing Letters,2016,13(9):1359-1363.

[41] WANG J L, HOU B, JIAO L C, et al. POL-SAR Image Classification Based on Modified Stacked Autoencoder Network and Data Distribution[J]. IEEE Trans on Geoscience and Remote Sensing,2020,58(3):1678-1695.

[42] CHEN Y Q, JIAO L C, LI Y Y, et al. Multilayer Projective Dictionary Pair Learning and Sparse Autoencoder for PolSAR Image Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2017,55(12):6683-6694.

[43] GOODFELLOW I J, POUGET-ABADIE J P, MIRZA M, et al. Generative Adversarial Nets[C]∥Proceedings of the 27th International Conference on Neural Information Processing Systems, Montreal, Canada: MIT Press,2014:2672-2680.

[44] HUA W Q, WANG S, LIU H Y, et al. Semisupervised PolSAR Image Classification Based on Improved Cotraining[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing,2017,10(11):4971-4986.

[45] LI M, ZHOU Z H. Setred: Self-Training with Editing[M]. Advances in Knowledge Discovery and Data Mining, Berlin, Heidelberg: Springer Berlin Heidelberg,2005:611-621.

[46] LI Y Y, XING R T, JIAO L C, et al. Semi-Supervised PolSAR Image Classification Based on Self-Training and Superpixels[J]. Remote Sensing,2019,11(16):1933.

[47] FANG Z, ZHANG G, DAI Q J, et al. Semisupervised Deep Convolutional Neural Networks Using Pseudo Labels for PolSAR Image Classification[J]. IEEE Geoscience and Remote Sensing Letters,2020,PP(99):1-5.

[48] WEI B H, YU J, WANG C, et al. PolSAR Image Classification Using a Semi-Supervised Classifier Based on Hypergraph Learning[J]. Remote Sensing Letters,2014,5(4):386-395.

[49] LIU H Y, WANG Y K, YANG S Y, et al. Large Polarimetric SAR Data Semi-Supervised Classification with Spatial-Anchor Graph[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing,2016,9(4):1439-1458.

[50] LIU H Y, YANG S Y, GOU S P, et al. Fast Classification for Large Polarimetric SAR Data Based on Refined Spatial-Anchor Graph[J]. IEEE Geoscience and Remote Sensing Letters,2017,14(9):1589-1593.

[51] LIU H Y, XU D R, ZHU T W, et al. Graph Convolutional Networks by Architecture Search for PolSAR Image Classification[J]. Remote Sensing,2021,13(7):1404.

[52] REN S J, ZHOU F. Semi-Supervised Classification for PolSAR Data with Multi-Scale Evolving Weighted Graph Convolutional Network[J]. IEEE Journal of Selected Topics in Applied Earth Observations and Remote Sensing,2021,14:2911-2927.

[53] SETTLES B. Active Learning Literature Survey[J]. Machine Learning,2010,15(2):201-221.

[54] BI H X, XU F, WEI Z Q, et al. An Active Deep Learning Approach for Minimally Supervised PolSAR Image Classification[J]. IEEE Trans on Geoscience and Remote Sensing,2019,57(11):9378-9395.

[55] LIU S J, LUO H W, SHI Q. Active Ensemble Deep Learning for Polarimetric Synthetic Aperture Radar Image Classification[J]. IEEE Geoscience and Remote Sensing Letters,2021,18(9):1580-1584.

[56] VILALTA R, DRISSI Y. A Perspective View and Survey of Meta-Learning[J]. Artificial Intelligence Review,2002,18(2):77-95.

[57] CHEN T, KORNBLITH S, NOROUZI M, et al. A Simple Framework for Contrastive Learning of Visual Representations[C]∥International Conference on Machine Learning,[S.l.]:[s.n.],2020:1597-1607.

[58] QIN X L, YANG J, ZHAO L L, et al. A Novel Deep Forest-Based Active Transfer Learning Method for PolSAR Images[J]. Remote Sensing,2020,12(17):2755.

[59] ZHANG L M, ZHANG S Y, ZOU B, et al. Unsupervised Deep Representation Learning and Few-Shot Classification of PolSAR Images[J]. IEEE Trans on Geoscience and Remote Sensing,2020,PP(99):1-16.

[60] DONG H, XU X, YANG R, et al. Component Ratio-Based Distances for Cross-Source PolSAR Image Classification[J]. IEEE Geoscience and Remote Sensing Letters,2020,17(5):824-828.

[61] WANG J, CHEN J Q, WANG Q W. Fusion of POLSAR and Multispectral Satellite Images: A New Insight for Image Fusion[C]∥2020 IEEE International Conference on Computational Electromagnetics (ICCEM),Singapore:IEEE,2020:83-84.